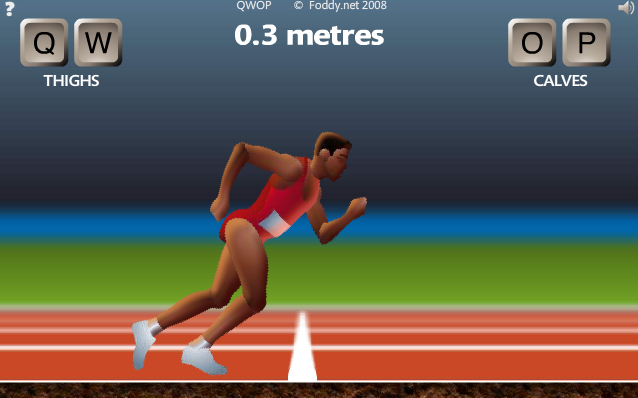

This is an experiment in training an RL agent to play the famous game QWOP. QWOP is a deceptively simple game: take control of your athlete and run 100m (328ft) along a race track, using the Q, W, O, and P keys on your keyboard to control the runner's limbs and move them forward. It is a challenging online game, known for its notoriously difficult gameplay and quirky graphics, that tests your coordination and patience as you control a runner competing in a track and field event. Human players usually learn a walking technique first and move on to running once they feel they have mastered it. The running technique isn't very different from the walking one; what sets it apart is mostly the speed and the feel you get for the game.

I've trained plenty of RL agents before, and I've even turned HTML5 games into RL environments before. So, instead of focusing on these aspects, this project focuses on infrastructure and scalability. In particular, I am experimenting with remote environments running on CPU-only machines.

Here are the components of the training system:

- Master: a GPU machine that computes actions and trains the agent.
- Workers: a set of CPU instances that asynchronously run multiple environments and ask the master for actions at every timestep.
- Redis: used for communicating between the CPU and GPU machines.

This setup has a nice consequence: it is really easy to monitor and debug. For example, if every worker sends each environment's frames to a different Redis channel, then a third party can hook into one of those channels and passively watch the agent play.

The agent learns the optimal "kneeling" gait, which looks lame, but I'm told is the best you can do. In the interest of getting something cooler-looking, I tried adding a bonus that rewards the agent for keeping its knees off the ground. Here is a video of the agent playing without a standing bonus. Here is a video of the agent playing with a standing bonus of 0.05. The difference is clear: the agent without the standing bonus is much faster, but it relies on its knees contacting the ground.
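The post doesn't say how the agent's actions map onto the four keys. One common choice, assumed here, is a discrete action space with one action per combination of held-down Q, W, O, and P keys, giving 2^4 = 16 actions. `ACTIONS`, `action_to_keys`, and `keys_to_action` are hypothetical names used only for this sketch.

```python
from itertools import product

# Hypothetical action space: every subset of {Q, W, O, P} held down
# during a timestep is one discrete action (2**4 = 16 actions).
KEYS = ("q", "w", "o", "p")
ACTIONS = [
    frozenset(key for key, pressed in zip(KEYS, bits) if pressed)
    for bits in product((False, True), repeat=len(KEYS))
]


def action_to_keys(action: int) -> frozenset:
    """Map a discrete action index to the set of keys to hold down."""
    return ACTIONS[action]


def keys_to_action(keys) -> int:
    """Inverse mapping: find the action index for a set of held keys."""
    return ACTIONS.index(frozenset(keys))
```

Action 0 releases every key and action 15 holds all four. A policy network would then output a distribution over these 16 indices.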
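The master/worker split above is described only at a high level. The sketch below is a hypothetical single-process model of it. Threads play the master and the workers, and `queue.Queue` objects stand in for Redis. In a real deployment these could be Redis lists (for example, pushed with `LPUSH` and consumed with the blocking `BRPOP`), but that mapping is an assumption. `ToyEnv`, `policy`, and the 16-action space are placeholders, not QWOP or the author's model.

```python
import queue
import random
import threading

NUM_ACTIONS = 16  # hypothetical: one action per Q/W/O/P key combination


class ToyEnv:
    """Hypothetical stand-in for a QWOP environment; observations are step counts."""

    def __init__(self, max_steps=5):
        self.t = 0
        self.max_steps = max_steps

    def reset(self):
        self.t = 0
        return self.t

    def step(self, action):
        self.t += 1
        return self.t, 0.0, self.t >= self.max_steps  # (obs, reward, done)


def policy(observations):
    """Placeholder for the GPU model: one batched forward pass."""
    return [random.randrange(NUM_ACTIONS) for _ in observations]


def master(requests, replies, total_steps):
    """Answer action requests in batches until total_steps actions are served."""
    served = 0
    while served < total_steps:
        batch = [requests.get()]  # block until at least one request arrives
        # Drain whatever else is already waiting so the policy sees a batch.
        while True:
            try:
                batch.append(requests.get_nowait())
            except queue.Empty:
                break
        actions = policy([obs for _, _, obs in batch])
        for (worker_id, env_id, _), action in zip(batch, actions):
            replies[worker_id].put((env_id, action))
        served += len(batch)


def worker(worker_id, num_envs, steps_per_env, requests, reply_q, log):
    """Run several environments, asking the master for every action."""
    envs = [ToyEnv() for _ in range(num_envs)]
    obs = [env.reset() for env in envs]
    for _ in range(steps_per_env):
        for env_id, o in enumerate(obs):
            requests.put((worker_id, env_id, o))
        for _ in range(num_envs):
            env_id, action = reply_q.get()
            o, _reward, done = envs[env_id].step(action)
            obs[env_id] = envs[env_id].reset() if done else o
            log.append((worker_id, env_id, action))


def run(num_workers=3, num_envs=4, steps_per_env=10):
    requests = queue.Queue()
    replies = [queue.Queue() for _ in range(num_workers)]
    log = []
    total = num_workers * num_envs * steps_per_env
    threads = [threading.Thread(target=master, args=(requests, replies, total))]
    threads += [
        threading.Thread(
            target=worker,
            args=(w, num_envs, steps_per_env, requests, replies[w], log),
        )
        for w in range(num_workers)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return log
```

Draining the request queue lets the master answer many environments with a single batched forward pass. That is presumably what allows one GPU machine to keep many CPU workers busy.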
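The monitoring trick relies on Redis pub/sub semantics. A message published to a channel goes to whoever is subscribed at that moment and is otherwise dropped, so workers never block on, or need to know about, a viewer. The sketch below mimics `PUBLISH`/`SUBSCRIBE` with an in-process `PubSub` class so it runs without a Redis server. The channel naming in `frame_channel` is a hypothetical scheme, and frames are plain strings instead of images.

```python
import queue
from collections import defaultdict


class PubSub:
    """In-process stand-in for Redis PUBLISH/SUBSCRIBE: fire-and-forget fan-out."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, channel):
        inbox = queue.Queue()
        self._subscribers[channel].append(inbox)
        return inbox

    def publish(self, channel, message):
        # Like Redis PUBLISH: deliver to current subscribers only and return
        # how many received it; with nobody listening the message is dropped.
        inboxes = self._subscribers.get(channel, [])
        for inbox in inboxes:
            inbox.put(message)
        return len(inboxes)


def frame_channel(worker_id, env_id):
    # Hypothetical naming scheme: one channel per (worker, environment).
    return f"frames:{worker_id}:{env_id}"


def watch(target_worker, target_env, num_workers=2, num_envs=3, steps=3):
    """Workers publish every frame; a viewer subscribes to just one environment."""
    bus = PubSub()
    viewer = bus.subscribe(frame_channel(target_worker, target_env))
    for t in range(steps):
        for worker_id in range(num_workers):
            for env_id in range(num_envs):
                bus.publish(frame_channel(worker_id, env_id), f"frame {t}")
    received = []
    while not viewer.empty():
        received.append(viewer.get_nowait())
    return received
```

Because each environment has its own channel, the viewer sees exactly one agent's frames, and the workers' code is unchanged whether anyone is watching or not.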
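The post gives the standing bonus (0.05) but not the full reward. As a minimal sketch, assume the base reward is the runner's forward progress per step. Assume also that the bonus is added on every step in which the knees are off the ground. `shaped_reward` and `episode_return` are hypothetical helpers, not the author's code.

```python
def shaped_reward(prev_x, x, knees_on_ground, standing_bonus=0.05):
    """Hypothetical shaped reward: forward progress plus a per-step standing bonus."""
    progress = x - prev_x
    bonus = 0.0 if knees_on_ground else standing_bonus
    return progress + bonus


def episode_return(positions, knee_contacts, standing_bonus=0.05):
    """Sum shaped rewards over a trajectory of x-positions and knee-contact flags.

    knee_contacts[t] says whether the knees touch the ground after step t.
    """
    total = 0.0
    for t in range(1, len(positions)):
        total += shaped_reward(
            positions[t - 1], positions[t], knee_contacts[t], standing_bonus
        )
    return total
```

Setting `standing_bonus=0` recovers the plain progress reward. Under this assumed form, the bonus only changes the agent's behavior when staying upright costs less progress than the bonus it earns.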
